Over the past 20 years, CV/ML technologies have evolved exponentially. When I built my first outdoor optical tracking systems, compute was very limited, sensors were either very expensive or very low resolution and noisy (analog), and readily available software for real-time tracking was virtually nonexistent. Back then, even common algorithms (such as camera intrinsic and extrinsic calibration) typically had to be designed and developed from scratch.

Over the past 25 years I have worked on many computer vision projects. These include my very first pioneering Speedway Tracking System prototype in 2002-2003, the Greyhound Tracking System pilot, systems for association football (including a system that was used in the Premier League 2010-2013, and piloted with Setanta Broadcasting in 2009), a CV project for Formula One, several kinds of tracking subsystems for VR headsets, and so on. Depending on how you count, I’ve shipped at least 11 major CV projects and worked on dozens of smaller subprojects over the years.

I’ve also learned an untold number of valuable lessons over the years. Many of these lessons are as true today as they were 20 years ago. I love to share advice on CV topics with customers and colleagues, and although many of the core lessons and concepts are obvious and well known (such as the importance of data quality, achieving robustness to variability, etc.), there are also some hard-fought personal lessons which I often find myself talking about. This article is about exactly those: the “probably-not-in-your-textbook” Top 5 lessons based on my experiences with shipping CV products!

Lesson 1: If the problem is hard, start with your Plan B

Lesson 1 applies to any circumstance where you are trying to solve a “hard” problem. Maybe even one which has never been solved before!

Sure, you have your ambitious Plan A which is the “best” way of solving the problem. Sure, you have more than a couple of months to make it happen, and so you can afford to take the technical risks. And yes, if all goes well there should be no problems, right?

If you ever find yourself reasoning along those lines, then before you proceed with your ambitious 2+ month project I highly recommend that you stop and at least consider the alternatives:

- If you only had 2 weeks, how would you solve the problem?

- If you only had 2 days, what would you do instead?

If you can think of any quick and simple solution which should kinda work – no matter how “unsophisticated” it may seem – get it done first before you do anything else! That way you have something in the bag straight away (which unblocks other dependencies such as internal API availability) and you can then focus on your more ambitious Plan A without a ton of extra stress when the deadline draws closer! But most importantly, by implementing the quick initial solution first you can often learn valuable lessons which will also help you in turning your Plan A into reality.

For example, when reflecting on ways to implement your system architecture, maybe you’ll find that it’s possible to hack together a quick-and-dirty end-to-end prototype by using various off-the-shelf HW modules and CV libraries. If that’s the case, then what are you waiting for? Start hacking together the prototype immediately, collect the learnings, and only worry about doing it “correctly” afterwards!

Update (Late 2025): The “2 weeks / 2 days” heuristic needs recalibrating. With current AI coding assistants and off-the-shelf vision models, your “2 day” fallback might now be a “2 hour” fallback – a quick prototype using a foundation model API or an open-source detector fine-tuned with synthetic data. This makes the lesson more important, not less: if you can validate the core idea in an afternoon, there’s no excuse for spending a month on Plan A before checking whether the problem is even worth solving.

I learned this lesson the hard way as I was building my first outdoor tracking system (i.e. the Speedway Tracking System back in 2003)! I was working hard towards an all-important investor demo and had initially decided I wanted to present a fully functioning tracking system to the investors as my Plan A. As the deadline approached, this goal started to look more and more infeasible. At the very last minute (specifically on the night before the demo day!) I made a desperate Plan B pivot to instead demo and visualise all the components that I had already managed to get working by then. The pivot worked! One sleepless night later I finished my Plan B a few minutes before the investors and trial bikes showed up, the demo went great, and we clinched the funding! What’s more, just by having better visualisations in place I also learned some valuable lessons which helped me eventually complete my Plan A objectives. Ever since that experience I have thought long and hard about scoping CV projects, and about what my Plan Bs and even Cs might be for any given project deadline, before tackling “never before solved”-type problems head-on. Be smart: don’t become the person who spends the entire night before the deadline programming in a dark, cold and unheated Speedway race track tower!

That experience, and the lesson I took from it, leads me directly to...

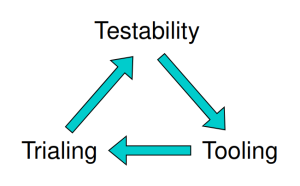

Lesson 2: The “Three Ts of CV”: Testability, Tooling, and Trialing

What’s the difference between CV research and CV product delivery? In the research phase, work typically revolves around prototyping architectures and benchmarking algorithms and approaches for solving the problem. Once work enters the product delivery phase, I’ve found three interlinked concepts critical for success: Testability, Tooling, and Trialing.

- Testability – the more complex the system is, the more critical it is to be able to split it into smaller parts that can be tested and proven out individually. Ideally this is considered as early as possible, when mapping out the system architecture. But, one way or another, you need the ability to isolate your components; otherwise you end up with a hard-to-debug black box system with compounding errors!

- Tooling – although it often takes time to develop, good tooling for visualising the (sub)system state – and more importantly, debugging it – is a critical investment for success. The difference between a CV amateur and a CV pro is that the amateur only starts work on such tooling when it’s absolutely necessary (at which point a lot of time has already been spent on inefficient debugging), whereas the pro invests in it from day 1. Note that this can make the pro look less effective at first because of the Pareto principle: the first 80% of the results come quickly even without tooling, but the remaining 20% is where most of the effort goes, and getting a CV system to near-100% quality without good tooling is simply not going to happen! You have to choose between looking impressive in week 1 and looking impressive 1 week before your deadline.

- Trialing – what this means is case-dependent, but think of it as getting your sensors (and ideally an early version of your product!) into the right environment for data collection and product trials. For a system to perform robustly in the real world, sufficiently large and diverse datasets must be captured. It is the outcomes and learnings from these trials which will influence general development (and confirm whether system testability and tooling are sufficient). If you are developing an autonomous vehicle, for example, then you must be able to capture footage from the operating environments under diverse illumination and operating conditions. If you are building a markerless motion capture rig, then you must engage a diverse range of individuals with different body shapes, ethnicities, hair styles, accessories, and so on.

Making good initial plans for all three will significantly improve the predictability of development, and the learnings from each step will feed into the next iteration!
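To make the Testability point a little more concrete, here is a minimal sketch of testing one pipeline stage in isolation. It assumes a hypothetical tracking stage that uses greedy nearest-neighbour association (chosen purely for illustration; it is not the method used in any of the systems mentioned above) and feeds it perfect, noise-free synthetic detections instead of the output of a real detector. The names `GreedyTracker`, `synthetic_frames` and `check_tracker_with_perfect_inputs`, and the 2.0 distance gate, are all assumptions for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    x: float
    y: float


class GreedyTracker:
    """Hypothetical tracking stage: greedy nearest-neighbour association.

    Unmatched detections start new tracks; tracks with no match in the
    current frame are dropped (kept deliberately simple for illustration).
    """

    def __init__(self, max_dist: float):
        self.max_dist = max_dist
        self.tracks: dict[int, Detection] = {}
        self._next_id = 0

    def update(self, detections: list[Detection]) -> dict[int, Detection]:
        updated: dict[int, Detection] = {}
        unused = list(range(len(detections)))
        for track_id, last in sorted(self.tracks.items()):
            # Pick the closest still-unassigned detection within the gate
            best, best_d = None, self.max_dist
            for i in unused:
                d = math.dist((last.x, last.y), (detections[i].x, detections[i].y))
                if d <= best_d:
                    best, best_d = i, d
            if best is not None:
                updated[track_id] = detections[best]
                unused.remove(best)
        # Any detection left over becomes a new track with a fresh ID
        for i in unused:
            updated[self._next_id] = detections[i]
            self._next_id += 1
        self.tracks = updated
        return updated


def synthetic_frames(n_frames: int) -> list[list[Detection]]:
    """Perfect inputs: two targets moving along parallel straight lines, 5 units apart."""
    return [[Detection(float(t), 0.0), Detection(float(t), 5.0)] for t in range(n_frames)]


def check_tracker_with_perfect_inputs(n_frames: int = 10) -> dict[int, Detection]:
    tracker = GreedyTracker(max_dist=2.0)
    for frame in synthetic_frames(n_frames):
        tracker.update(frame)
    # With noise-free inputs the stage must keep exactly two tracks with stable IDs
    assert sorted(tracker.tracks) == [0, 1]
    assert tracker.tracks[0].y == 0.0 and tracker.tracks[1].y == 5.0
    return tracker.tracks
```

If a check like this fails, the fault lies in the association logic itself rather than in detector noise or calibration, which is exactly the kind of isolation that stops errors from compounding across stages.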

Lesson 3: Ground truth is important, but so is the acquisition cost!

This lesson applies to any case where there’s non-trivial cost and/or complexity in acquiring data, labeling and “ground truth” for proving out your algorithms.

For computer vision and machine learning, the importance of recording data and ground truth is well understood. At a high level, ground truth data allows you to benchmark how well your algorithms work (and thus judge how close you are to a shippable product), and helps you make evidence-based decisions about where to focus your engineering time next.

What’s sometimes less obvious is how to balance the quality of ground truth against the cost (time, money, etc.) of acquiring it.

When developing a CV system from scratch (so-called 0-to-1 projects), it typically makes sense to start off with simple datasets and ensure the system works well with “perfect inputs” (remember Lesson 2 about testability!). Luckily, the cost of ground truth is typically also low in this phase, as simple jigs that confine movement paths, or simulations with artificially perfect data, should be relatively cheap and easy to make (plus, these days you may be able to obtain existing labeled datasets from the Internet, or leverage transfer learning from existing ML models).

It also often makes little sense to add complexity (and execution time!) to the tests and system until the simple cases are proven to work correctly. For example, if you are developing a SLAM solution, it would make sense to start off with simple test cases where your sensor(s) travel along a single axis for a known distance (e.g. 1 meter) per test case. If you are developing a people tracking solution, ask your test subjects to follow a straight line and stop at pre-measured intervals (e.g. every two meters), and so on.
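A minimal sketch of how such canonical test cases might be scored: compare the system's estimated positions at each pre-measured checkpoint with the known ground truth, and fail the test if any error exceeds a tolerance. The function names and the 2 cm default tolerance are illustrative assumptions rather than values from any real project, and the estimated positions would come from whatever SLAM or tracking system is under test.

```python
import math
from typing import Sequence

# Hypothetical 3D position type, in metres
Point = tuple[float, float, float]


def checkpoint_errors(estimated: Sequence[Point], ground_truth: Sequence[Point]) -> list[float]:
    """Euclidean error at each pre-measured checkpoint (same units as the inputs)."""
    if not ground_truth or len(estimated) != len(ground_truth):
        raise ValueError("expected exactly one estimate per ground-truth checkpoint")
    return [math.dist(e, g) for e, g in zip(estimated, ground_truth)]


def passes_canonical_test(
    estimated: Sequence[Point],
    ground_truth: Sequence[Point],
    tolerance_m: float = 0.02,
) -> tuple[bool, list[float]]:
    """Pass only if every checkpoint is within tolerance; also return per-checkpoint errors."""
    errors = checkpoint_errors(estimated, ground_truth)
    return max(errors) <= tolerance_m, errors


def single_axis_ground_truth(axis: int, distance_m: float = 1.0) -> list[Point]:
    """Start and end points for a jig moving the sensor along one axis (0=x, 1=y, 2=z)."""
    end = [0.0, 0.0, 0.0]
    end[axis] = distance_m
    return [(0.0, 0.0, 0.0), tuple(end)]


def straight_line_stops(n_stops: int, spacing_m: float = 2.0) -> list[Point]:
    """Pre-measured floor marks for a subject walking a straight line, stopping every spacing_m."""
    return [(k * spacing_m, 0.0, 0.0) for k in range(n_stops)]


if __name__ == "__main__":
    # Example: a SLAM estimate for a 1 m move along x that ended slightly off target
    ok, errors = passes_canonical_test(
        [(0.0, 0.0, 0.0), (1.01, 0.005, 0.0)], single_axis_ground_truth(axis=0)
    )
    print(ok, errors)
```

Keeping each canonical case this small means a failure points directly at one axis or one checkpoint, and the same scoring function can be reused unchanged once the test motions become more complex.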

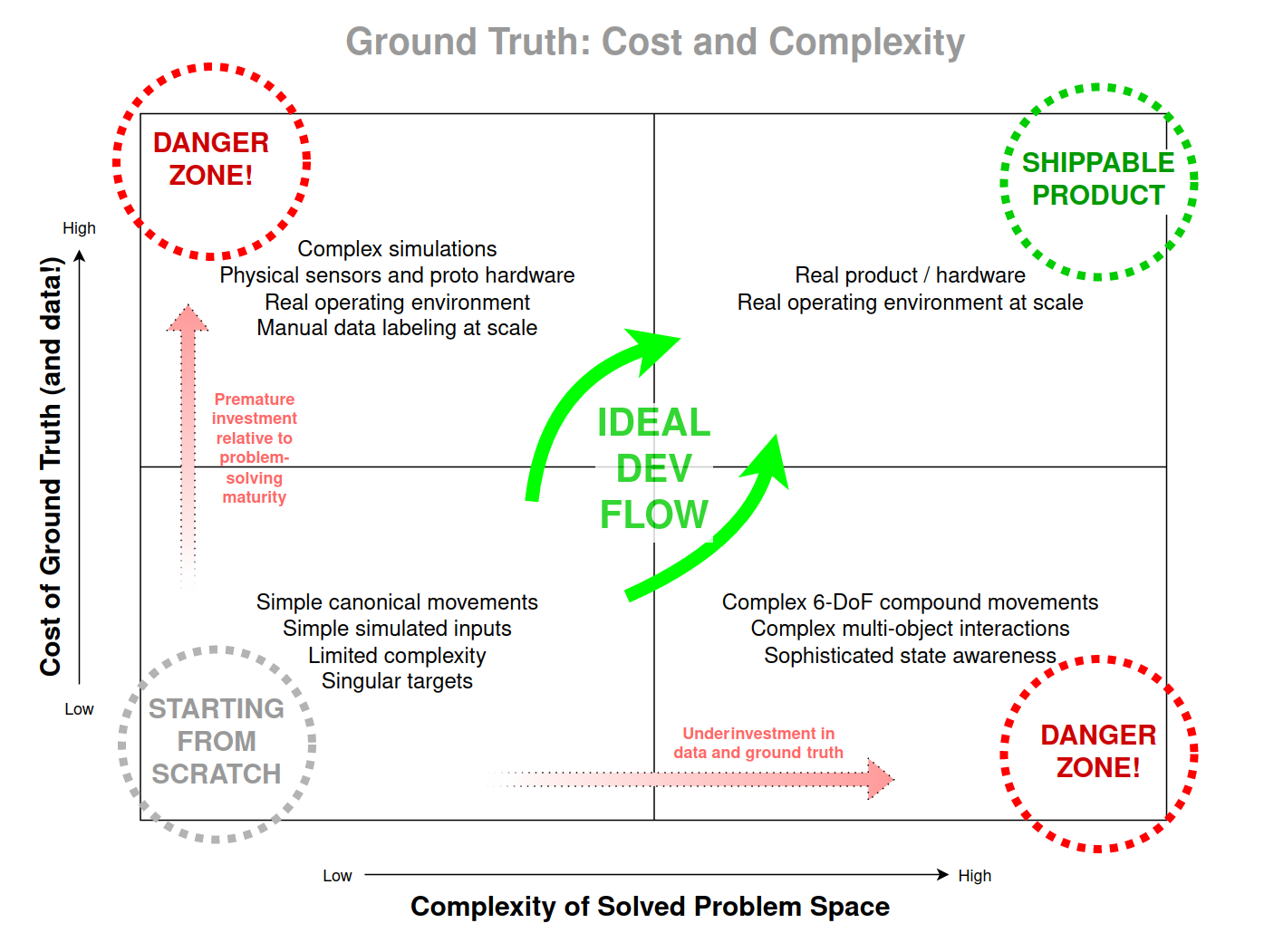

Only when your algorithms are proven to behave robustly with such simple canonical motions does it make sense to tackle more complex cases (multi-target, 6 DoF, large environments, etc.). At this point it is also important to ensure that your investment in data collection – and in the often more expensive ground truth – does not lag behind. In the chart above there are two distinct “Danger Zones”:

- Danger Zone 1 (Top left corner) – Data collection and ground truth investments are premature relative to algorithm maturity. When this happens, there is a high risk that some of the investment turns out to be wasted (for example, tooling is developed and data collected & labeled for some purpose which later turns out to be irrelevant or unnecessary, whilst the data which would have been important wasn’t even thought of). Proceed down this path with caution!

- Danger Zone 2 (Bottom right corner) – Underinvestment in data collection and ground truth. When attempting to increase algorithmic complexity without ground truth and data which match the actual target use case, the developers run a high risk of making incorrect decisions (technical and/or in terms of where they invest their time). Avoid ending up here at all costs!

It is also important to remember that as you scale your data collection and ground truth acquisition effort, the processing cost of the bigger and more complex continuous training (CT) or MLOps pipeline also tends to go up. So, start simple, prove out your algorithms and architectures, and build from there!

Update (Late 2025): The ground truth acquisition landscape has shifted significantly since the above lesson was written. AI-assisted annotation tools (Segment Anything Model and derivatives, auto-labeling pipelines, synthetic data generation) have dramatically reduced the cost of initial labeling passes. The tradeoff calculus described above still applies, but the “expensive ground truth” phase now often means human review and correction of AI-generated labels rather than annotation from scratch. The core lesson – match investment to algorithm maturity – remains valid, but the absolute costs have dropped substantially for many common object classes.

Lesson 4: Scoping – if it’ll take more than 6 months to complete, split the project!

Let’s say you have drawn up your CV development plan and it looks like it’ll take 11 months to complete it. Great, now it’s time to split it into two or more subprojects!

For example, it could make sense to split it into a 5-month research project (with the objective of creating a fully working proof of concept which can be used for demoing to prospective customers) followed by a 6-month product delivery phase (which will ship the actual product). The phases may require quite different approaches – and often different expertise – for success, and that fact alone means it makes sense to plan and resource them as separate subprojects! More generally, the more complex a project is (such as a multi-year project to build a higher-level automated driving system, or ADS), the more important it becomes to split it into meaningful shorter subprojects with tangible objectives for each.

The reasons for splitting are simple:

- Planning and budgeting your product delivery stages can be difficult before you have completed your research project(s) and collected the necessary learnings. Conflating your research, prototyping and product delivery will result in a muddled roadmap (which you’ll be rewriting every month) – keep these separate!

- Optimal talent allocation – some people are better at prototyping, coming up with innovative designs and solutions, and other people are better at grinding products out the door. Help your team to succeed by playing to their individual strengths!

- Keeping up with the competition. CV technologies and the marketplace evolve rapidly. If you are in a particularly competitive field, it could well be that if you can’t ship something tangible within 6 months, you won’t ship at all!

In short, be agile! If the (sub)project looks like it’s too big, it’s time to split it up into more concise parts with tangible and more easily measured outcomes!

And unless you are working on a problem that you’ve solved in the past, carrying out a research project first, one that can serve as a proof of concept (and feasibility study), is the only way to create a reliable product delivery roadmap (with a budget and deadline that you can commit to!).

Update (Late 2025): The 6-month rule was conservative in 2024; today it is too generous. Foundation model capabilities are shifting quarterly – a project scoped in January may be solving a problem that’s commoditised by June, or using an approach that’s been obsoleted by a new release. For anything touching vision or language, 3-month milestones with explicit “is our technical approach still correct?” checkpoints are closer to the right cadence.

Lesson 5: It’s not just about CV – don’t forget about the business case!

I have had my share of projects which were technical successes, and even shipped on time, but ultimately struggled to find product-market fit.

Sometimes this just comes down to underestimating the pace of technical progress – the business idea may have made sense to “everyone” when the project was conceived, but two to three years down the road the idea is disrupted into obsolescence.

What’s worse, the reality of computer vision, machine learning and “AI” technology is that the market and the state of the art move fast (and ever faster, as per Ray Kurzweil’s prescient essay “The Law of Accelerating Returns” and its concept of waves of converging exponential technologies!). Keeping your project scopes short and focused helps you stay aligned while you hone your product vision.

Indeed, often the best approach for making sure your CV objectives and business objectives remain aligned to the marketplace realities is to speed up your learning cycles! This requires sensible scoping (Lessons 1 and 4) and great project execution (Lessons 2 and 3). When combined with some great product management instincts you’re well on your way to riding your next wave of success!

Conclusion

As with most advice, my personal Top 5 is a generalisation, and every CV project has its case-specific considerations.

That said, I’d argue these lessons matter more now than when I first learned them. The current wave of foundation models and AI tooling has collapsed the time from idea to working prototype – what once took months now takes days. But that acceleration makes the gap between “demo” and “product” more dangerous, not less. It’s now trivially easy to get impressive results on curated inputs, which means the discipline of rigorous testing, proper ground truth, and honest scoping is the main thing separating teams that ship from teams that chase diminishing returns on increasingly narrow benchmarks.

The hard problems haven’t gone away. They’ve just moved downstream – into deployment, reliability, edge cases, and operational reality. The teams winning in 2025 and beyond aren’t the ones with the cleverest models; they’re the ones who understood Lessons 2 and 3 well enough to build the infrastructure that lets them iterate fast when the model meets the real world.